Mutual Information

CaC

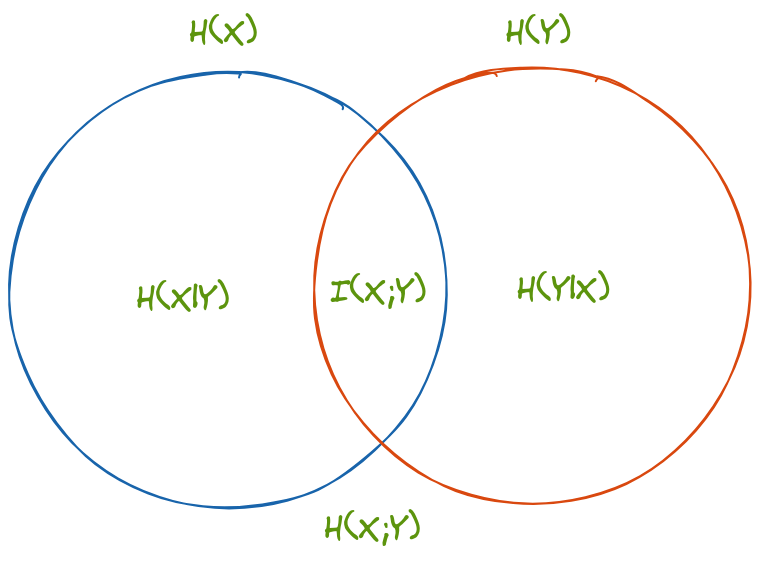

Venn Diagram of Entropy
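Reading the diagram region by region gives the standard identities that relate the joint, marginal and conditional entropies to the overlap $I(X,Y)$:

$$
\begin{aligned}
H(X,Y) &= H(X) + H(Y) - I(X,Y) \\
H(Y|X) &= H(X,Y) - H(X) \\
I(X,Y) &= H(Y) - H(Y|X) = H(X) - H(X|Y)
\end{aligned}
$$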

$I(X,Y)$ is the mutual information: the overlap of the $H(X)$ and $H(Y)$ circles, i.e. the information that X and Y share.
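As a minimal sketch, assuming the joint distribution of two discrete variables is given as a nested list `joint[x][y] = p(x, y)` and that information is measured in bits (log base 2), the hypothetical helper below evaluates the standard definition $I(X,Y) = \sum_{x,y} p(x,y)\log_2 \frac{p(x,y)}{p(x)p(y)}$:

```python
import math

def mutual_information(joint):
    """I(X,Y) in bits for a joint table joint[x][y] = p(x, y) (hypothetical helper)."""
    px = [sum(row) for row in joint]          # marginal p(x): sum over y
    py = [sum(col) for col in zip(*joint)]    # marginal p(y): sum over x
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # terms with p(x, y) = 0 contribute nothing
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

# Y is always equal to X, a fair bit: knowing X reveals Y completely.
same = [[0.5, 0.0], [0.0, 0.5]]
# X and Y are independent fair bits: they share nothing.
indep = [[0.25, 0.25], [0.25, 0.25]]
print(mutual_information(same))   # 1.0
print(mutual_information(indep))  # 0.0
```

The two examples are the extreme cases of the diagram: full overlap (1 bit shared) and disjoint circles (0 bits shared).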

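$H(Y|X)$ is the conditional entropy: the information Y still provides (its remaining uncertainty) once X is known. It is at most $H(Y)$, because knowing the value of X can only reduce, on average, the uncertainty about Y. The size of that reduction is exactly the mutual information, $H(Y) - H(Y|X) = I(X,Y) \ge 0$, and $H(Y|X) = H(Y)$ only when X and Y are independent.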
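A small numerical check of this inequality, as a sketch under the same assumptions (joint table `joint[x][y] = p(x, y)`, entropies in bits; `entropy` and `conditional_entropy` are hypothetical helper names). It computes $H(Y|X) = \sum_x p(x)\,H(Y \mid X = x)$ for a noisy copy of a fair bit and compares it with $H(Y)$:

```python
import math

def entropy(probs):
    """Shannon entropy in bits of a list of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(Y|X) = sum over x of p(x) * H(Y | X = x), for joint[x][y] = p(x, y)."""
    h = 0.0
    for row in joint:
        px = sum(row)
        if px > 0:
            # Entropy of the conditional distribution p(y | x), weighted by p(x)
            h += px * entropy([pxy / px for pxy in row])
    return h

# X is a fair bit; Y equals X with probability 0.8.
joint = [[0.4, 0.1], [0.1, 0.4]]
h_y = entropy([sum(col) for col in zip(*joint)])
h_y_given_x = conditional_entropy(joint)
print(h_y)                          # 1.0
print(round(h_y_given_x, 4))        # 0.7219
print(round(h_y - h_y_given_x, 4))  # 0.2781 bits, i.e. I(X,Y)
```

Knowing X lowers the uncertainty about Y from 1 bit to about 0.72 bits; for the independent table `[[0.25, 0.25], [0.25, 0.25]]` the same code gives $H(Y|X) = H(Y) = 1$ bit, the equality case.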